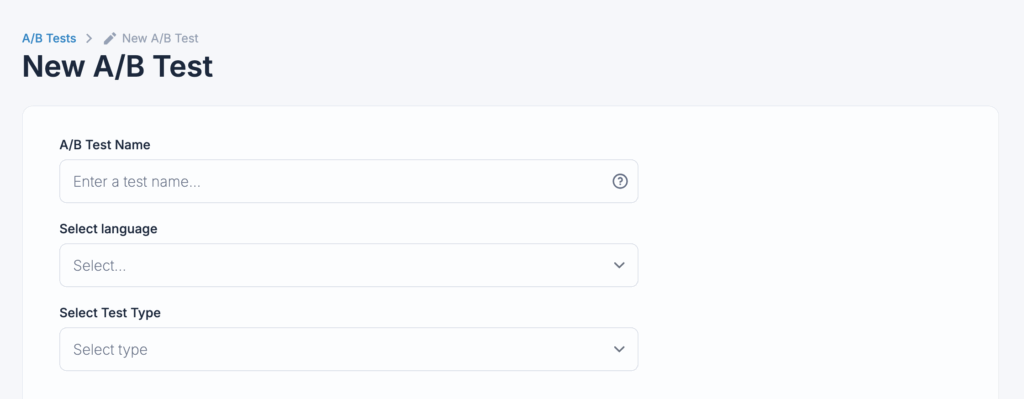

Step 1: Set the Test Name and Select the Language for the A/B Test

Step 2: Identify the Test Variables

Start by selecting the element you want to test. Options include:

- Search Pipelines → Comparing different ways to display products (grid vs. list), or testing which search pipeline performs best.

- Recommendations → Testing different methods of personalizing product suggestions.

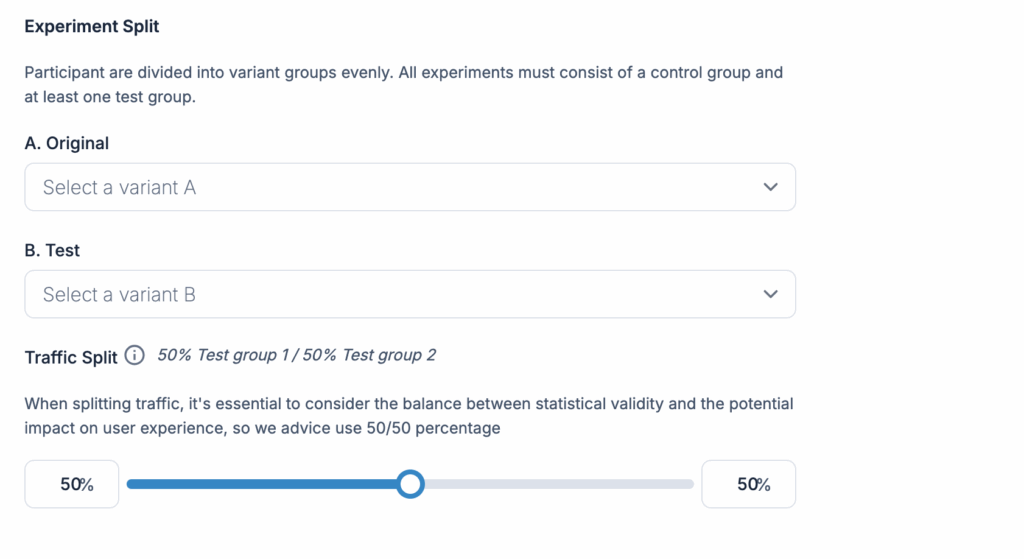

Step 3: Split the Audience

The audience is randomly divided into two (or more) groups, with each group seeing a different version (A or B). For example:

- Group A might see Version A of a product page or recommendation.

- Group B might see Version B.

Set the traffic split between the groups. Each group receives the share of total traffic given by its percentage. For example:

- 50/50 Split → Half of the users see Variation A and half see Variation B.

- 80/20 Split → 80% of traffic goes to Variation A, while 20% is allocated to Variation B (often used if one version is considered more important or needs more data).

When splitting traffic, balance statistical validity against the potential impact on user experience. We recommend a 50/50 split: each variation collects the same amount of data, so the test reaches a reliable result sooner.
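One common way to implement a percentage split is deterministic hashing: each user ID is hashed together with the test name into a bucket from 0 to 99, and bucket ranges are assigned to variations in proportion to the split. The Python sketch below assumes this approach; the platform's actual assignment logic is not described here, and `assign_variation`, its parameters, and the user IDs are hypothetical. Hashing, rather than picking a group at random on every visit, keeps a returning user in the same group for the whole test.

```python
import hashlib
from collections import Counter

def assign_variation(user_id: str, test_name: str, split: dict[str, int]) -> str:
    """Hypothetical helper: map a user to a variation using percentage weights."""
    if sum(split.values()) != 100:
        raise ValueError("split percentages must sum to 100")
    # Hash test name + user ID so a given user always gets the same bucket (0-99)
    # for this test, while different tests shuffle users independently.
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    # Walk the cumulative percentages: with {"A": 80, "B": 20},
    # buckets 0-79 go to A and buckets 80-99 go to B.
    upper = 0
    for variation, percent in split.items():
        upper += percent
        if bucket < upper:
            return variation
    raise AssertionError("unreachable when percentages sum to 100")

# Usage: simulate 10,000 users under an 80/20 split.
counts = Counter(
    assign_variation(f"user-{i}", "pdp-layout-test", {"A": 80, "B": 20})
    for i in range(10_000)
)
print(counts)  # counts should be close to 8,000 for A and 2,000 for B
```

Changing the split percentages mid-test moves bucket boundaries and reassigns some users, so in this sketch the split should stay fixed while the test runs.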

Step 4: Run the Test & Collect Data

Run the test for a specified period (days or weeks) to gather enough data for statistically reliable results. AI algorithms then analyze and compare the performance metrics of the two groups.

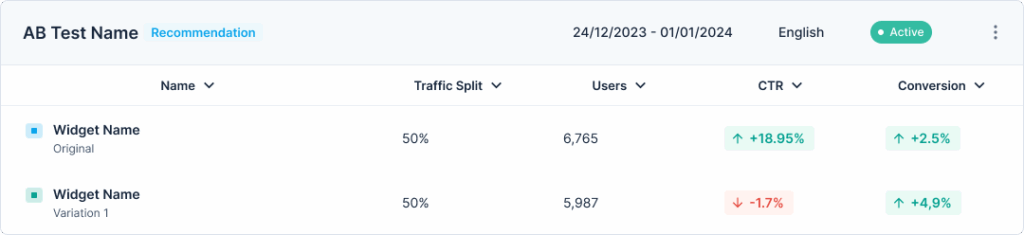

Step 5: Analyze Results & Apply Insights

Once the test is complete, the AI determines which version performed better based on your success metrics, and the winning version is used going forward. Success metrics include:

- CTR (click-through rate) → Which version of the page gets more clicks.

- CVR (conversion rate) → Which variation leads to more purchases.

- Revenue per Session → Which variation generates more revenue per session.

- User Engagement → Which version keeps users browsing longer.
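The metrics above can be computed directly from session data. The Python sketch below assumes a hypothetical `Session` record and defines CTR and CVR per session (other definitions, such as clicks per impression, are also common). To judge whether a difference in rates is more than random noise, it uses a classical pooled two-proportion z-test; this is a standard statistical check shown for illustration, not necessarily how the platform's AI picks a winner.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import NormalDist, mean

@dataclass
class Session:
    """One visitor session (hypothetical schema for illustration)."""
    variation: str
    clicked: bool        # at least one product click in the session
    purchased: bool      # at least one order placed in the session
    revenue: float
    duration_sec: float

def summarize(sessions: list[Session], variation: str) -> dict[str, float]:
    rows = [s for s in sessions if s.variation == variation]
    n = len(rows)
    if n == 0:
        raise ValueError(f"no sessions for variation {variation!r}")
    return {
        "sessions": n,
        "ctr": sum(s.clicked for s in rows) / n,                 # share of sessions with a click
        "cvr": sum(s.purchased for s in rows) / n,               # share of sessions with a purchase
        "revenue_per_session": sum(s.revenue for s in rows) / n,
        "avg_duration_sec": mean(s.duration_sec for s in rows),  # engagement proxy
    }

def two_proportion_p_value(successes_a: int, n_a: int, successes_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two rates (pooled z-test)."""
    p_pool = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # both groups all-0 or all-1: no evidence of a difference
    z = (successes_b / n_b - successes_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Usage with made-up data
sessions = [
    Session("A", True, False, 0.0, 40.0),
    Session("A", True, True, 25.0, 180.0),
    Session("B", False, False, 0.0, 15.0),
    Session("B", True, True, 60.0, 240.0),
]
print(summarize(sessions, "A"))
# Hypothetical aggregate CVR counts: A converts 300 of 10,000 sessions, B 360 of 10,000
print(two_proportion_p_value(300, 10_000, 360, 10_000))  # below 0.05
```

The z-test applies only to rate metrics such as CTR and CVR; continuous metrics such as revenue per session or session duration need a different test, for example Welch's t-test.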